Kimi K2.5 is now live as a single unified model across the Kimi App, Kimi.com, Kimi Code, and the Kimi API, with both Instant and Thinking modes supported. Agent swarm capabilities are available through Swarm Mode on Kimi.com, currently accessible to Allegretto-tier users and above.

The model is designed as an all-in-one system built on a native multimodal architecture, unifying visual understanding, reasoning, coding, and agentic execution within a single operational framework.

The model is also accessible via third-party provider platforms, including build.nvidia.com and Fireworks, extending deployment flexibility for organizations that prefer existing AI infrastructure and tooling ecosystems.

This broad availability underscores Moonshot AI’s intent to position Kimi K2.5 as an operational model designed for real-world integration across development, product, and enterprise workflows.

“We aim to turn your ideas into products and your data into insights, while minimizing technical friction,” said Zhilin Yang, Founder and CEO of Kimi, in the release video for the new model.

A New Class of Open-Source Intelligence

Kimi K2.5 is a native multimodal agentic model trained on approximately 15 trillion mixed visual and text tokens, meaning it processes images, video, and text as first-class inputs, not afterthoughts.

Native integration enables coherent reasoning across modes, a critical requirement for real-world workflows where context isn’t purely verbal.

Traditionally, cutting-edge AI has been dominated by closed ecosystems controlled by major U.S. hyperscalers.

That ‘walled garden’ model limited flexibility and forced organizations into vendor lock-in. Open-source alternatives, however, are rapidly gaining traction because they foster transparency, extensibility, and deep customization.

Enterprises can now access a single model capable of interpreting complex visual designs, turning them into production-ready code, inspecting interface renders, and autonomously fixing visual inconsistencies.

Why Vision-Enabled Coding Matters

One of Kimi K2.5’s standout capabilities is vision-based coding. Traditional AI coding assistants excel at text-to-code tasks but struggle when context lives in visual artifacts such as screenshots, design mockups, or video walkthroughs.

Kimi K2.5 blurs that boundary: it can generate front-end code from visual representations, debug layout issues by inspecting rendered outputs, and generally reason about code with a visual understanding that mimics human developers.

This matters in three ways for enterprises:

1. Speed

Translating design assets into functional code manually is time-intensive and error-prone. Vision coding reduces that friction, collapsing weeks of iteration into minutes.

2. Collaboration

Product, design, and engineering teams speak different languages. A multimodal model bridges those gaps without heavy tooling overhead.

3. Innovation

Code generation that understands visual context unlocks new UI/UX-centric automation that traditional LLMs can’t handle.

In an industry where speed and alignment determine market leadership, these improvements aren’t incremental. They’re foundational.

From Single Agents to Agent Swarms

Agentic AI has become shorthand for systems that act autonomously on complex workflows rather than merely respond to prompts.

The real shift in Kimi K2.5 is its embrace of the agent swarm paradigm. Instead of relying on a single autonomous agent to tackle a task end-to-end, the model can spawn up to 100 sub-agents that coordinate and execute in parallel across as many as 1,500 steps.

Think of it less like a lone general and more like an interconnected team of specialists:

- A research agent dives into domain knowledge.

- A code generation agent writes functional modules.

- A verification agent checks for logical soundness.

- A visual debugging agent inspects outputs for UI fidelity.

This distributed intelligence approach mirrors real organizational roles but operates orders of magnitude faster. Tasks like converting a 30,000-word research report into a polished investor pitch deck can now happen in minutes via simple chat interactions.

That kind of capability has meaningful ROI implications for knowledge work, product development, and analytical workflows where human bottlenecks are the default constraint.
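The swarm pattern described above, with specialist sub-agents working in parallel under a cap, can be sketched in a few lines of Python. This is a minimal illustration and not Moonshot AI's implementation: `SubTask`, `run_sub_agent`, and `run_swarm` are hypothetical names, and the sub-agent is a stub standing in for a real model call. Only the cap of 100 parallel sub-agents comes from the article.

```python
import asyncio
from dataclasses import dataclass

MAX_SUB_AGENTS = 100  # cap quoted in the article; everything else is hypothetical


@dataclass
class SubTask:
    role: str      # e.g. "research", "codegen", "verify", "visual_debug"
    payload: str   # what this sub-agent is asked to do


async def run_sub_agent(task: SubTask) -> str:
    """Stub sub-agent; a real one would call a model here."""
    await asyncio.sleep(0)  # yield control, simulating I/O
    return f"[{task.role}] done: {task.payload}"


async def run_swarm(tasks: list[SubTask], max_parallel: int = MAX_SUB_AGENTS) -> list[str]:
    """Fan tasks out to sub-agents, never running more than max_parallel at once."""
    gate = asyncio.Semaphore(max_parallel)

    async def guarded(task: SubTask) -> str:
        async with gate:
            return await run_sub_agent(task)

    # gather preserves input order, so results line up with tasks
    return await asyncio.gather(*(guarded(t) for t in tasks))


def demo() -> list[str]:
    tasks = [
        SubTask("research", "summarize domain background"),
        SubTask("codegen", "write the parser module"),
        SubTask("verify", "check the parser logic"),
        SubTask("visual_debug", "compare render to mockup"),
    ]
    return asyncio.run(run_swarm(tasks))
```

Calling `demo()` returns one result string per task, in the same order the tasks were submitted. The semaphore is the only coordination primitive here; a production orchestrator would also need dependency ordering between agents, which this sketch leaves out.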

Where K2.5 Fits in the Open-Source Firmament

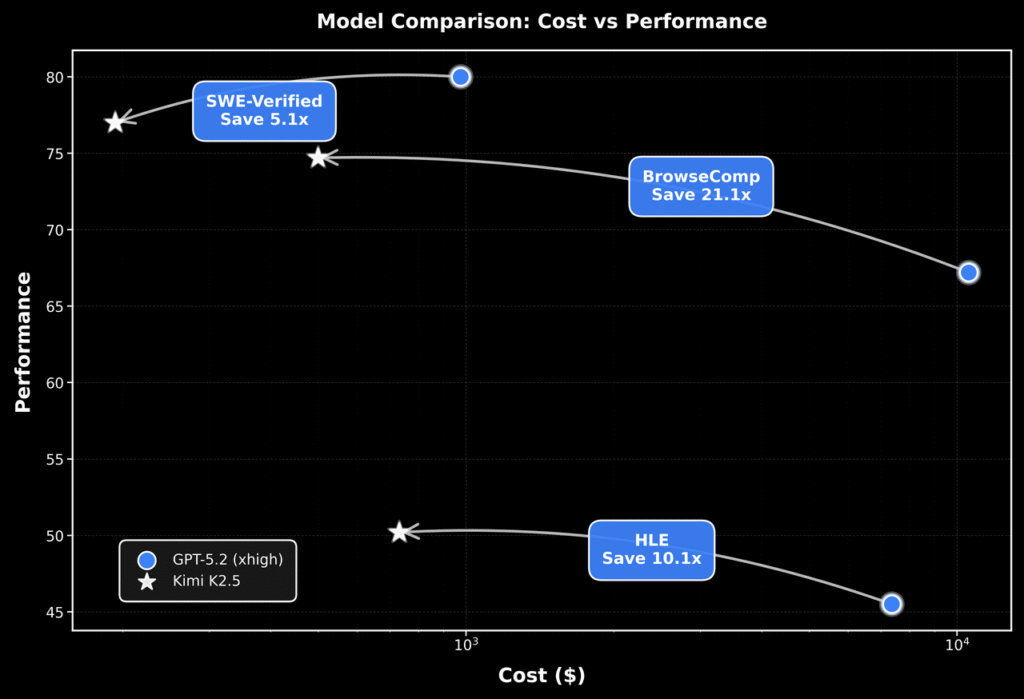

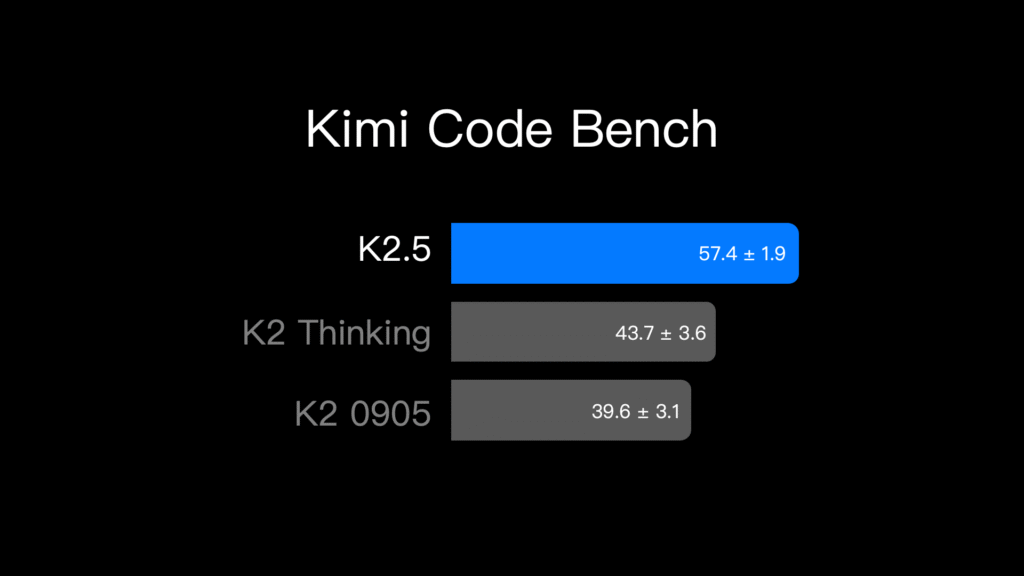

Evaluating Kimi K2.5’s contribution requires situating it among broader open-source AI trends. In 2025 and 2026, open models have steadily improved across reasoning, coding, and multimodal benchmarks, narrowing the gap with proprietary offerings.

Top contenders like Qwen3, Llama 4 variants, and DeepSeek have all made strides, but many lack the agentic orchestration focus that K2.5 foregrounds.

This distinction matters. Benchmarks often measure static capabilities – e.g., reasoning accuracy or code quality.

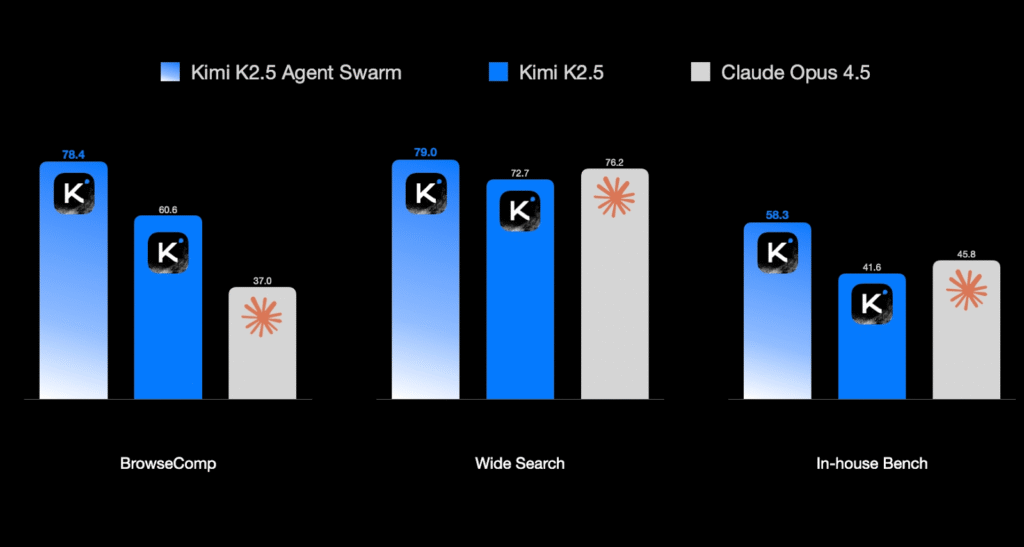

Kimi K2.5’s benchmark results are based on rigorously defined testing conditions, with explicit disclosure of reasoning modes, tool usage, context management, and evaluation limits across competing models.

Agentic models, by contrast, are evaluated by their ability to operate, orchestrate, and complete multistep tasks under real-world time constraints. That’s a different metric altogether, and Kimi K2.5’s architecture explicitly prioritizes it.

Proprietary models from major labs continue to excel at raw reasoning benchmarks, and many enterprise workflows demand strict compliance, explainability, and safety frameworks that open-source AI must adapt to. Open source does not automatically mean enterprise-ready.

Adoption, Integration, and Enterprise Considerations

From a decision-maker’s perspective, Kimi K2.5’s strengths are meaningful but not plug-and-play. Its open-source nature brings both opportunity and complexity:

Kimi K2.5 creates a clear opportunity for enterprises to rethink how open-source AI can be used for scalable, multimodal automation across knowledge and development workflows.

Customizability

Enterprises can tailor the model to domain-specific data and workflows without vendor lock-in.

Transparency

Open weights and architecture facilitate auditing, compliance, and security reviews.

Cost Control

Organizations can deploy models on-premises or via cloud infrastructure of their choice, optimizing for cost and performance.

The shift to agent swarms also increases complexity, requiring enterprises to manage coordination, accountability, and risk across distributed AI workflows.

Governance

Open-source models require robust guardrails to ensure compliance with internal policies and external regulations.

Integration

Swarm intelligence unlocks parallel execution, but orchestration infrastructure must handle scheduling, error handling, and resource contention.

Talent

Effective deployment demands teams skilled in AI engineering and model customization, a resource many firms still lack.

These aren’t theoretical trade-offs. They reflect the hard choices enterprise leaders face when adopting bleeding-edge AI.
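To make the integration concern concrete, here is a minimal Python sketch of failure isolation in a parallel batch: each sub-agent runs under a timeout, and one failure is recorded without aborting the rest. The names `flaky_agent` and `run_isolated` are hypothetical, and the agents are stubs. This illustrates the kind of plumbing an orchestration layer needs; it does not describe how Kimi K2.5 handles errors internally.

```python
import asyncio


async def flaky_agent(name: str, fail: bool) -> str:
    """Hypothetical sub-agent stub; raises to simulate a failed step."""
    await asyncio.sleep(0)
    if fail:
        raise RuntimeError(f"{name} failed")
    return f"{name} ok"


async def run_isolated(specs: dict[str, bool], timeout_s: float = 1.0) -> dict[str, str]:
    """Run every agent with a timeout; record failures instead of aborting the batch."""
    names = list(specs)
    outcomes = await asyncio.gather(
        *(asyncio.wait_for(flaky_agent(n, specs[n]), timeout_s) for n in names),
        return_exceptions=True,  # one failure does not cancel the others
    )
    report: dict[str, str] = {}
    for name, outcome in zip(names, outcomes):
        if isinstance(outcome, Exception):
            report[name] = f"error: {outcome}"
        else:
            report[name] = outcome
    return report


def demo_isolated() -> dict[str, str]:
    return asyncio.run(
        run_isolated({"research": False, "codegen": True, "verify": False})
    )
```

The per-agent report gives teams a starting point for traceability, since every outcome, including each failure, is attributed to a named agent.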

This gap between technical capability and operational impact is well documented in enterprise AI adoption. As Satya Nadella, CEO of Microsoft, has noted, “The challenge isn’t the technology. It’s helping people change how they work.” The observation underscores a recurring reality for large organizations. AI value creation is less constrained by model sophistication than by workflow redesign, organizational readiness, and the ability to embed new systems into existing ways of working.

Looking Ahead: Strategic Implications

The broader AI ecosystem is racing toward agentic, composable intelligence. Initiatives like the Agentic AI Foundation, which aims to standardize protocols across vendors, underscore a parallel trend toward interoperability and shared standards.

In this context, Kimi K2.5 isn’t just a new model. It’s a statement about where the industry is headed: toward systems that reason, act, and scale autonomously, across modalities and organizational boundaries.

It signals that leadership in AI will depend not just on access to compute or data, but on how effectively organizations orchestrate intelligence at scale, in ways that align with business goals and governance realities.

Conclusion

Kimi K2.5 is a sophisticated, aggressively engineered milestone in the evolution of open-source AI. It pushes the boundary of what open systems can do in vision, coding, and autonomous task orchestration.

The release of Kimi K2.5 amplifies a critical insight: the future of enterprise AI lies in orchestration as much as capability.

AITech Insights Perspective: Why Kimi K2.5 Matters Beyond the Model

From a market traction perspective, Moonshot AI enters this release with measurable momentum. Consumer-facing monetization has shown exponential growth, with global paid users increasing at an average month-over-month rate of over 170 percent between September and November 2025.

Over the same period, API revenue grew fourfold following the launch of Kimi K2 Thinking, indicating growing enterprise and developer adoption ahead of the K2.5 release.
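For a sense of scale, the month-over-month figure can be compounded. The short Python sketch below assumes exactly two monthly steps (September to October, October to November) and treats 170 percent as a constant rate. Both are assumptions, since the article reports only an average of "over 170 percent" and does not say how it was calculated. The function name `compound_growth` is illustrative.

```python
def compound_growth(monthly_rate: float, months: int) -> float:
    """Return the total growth multiple after compounding monthly_rate for `months` steps."""
    return (1.0 + monthly_rate) ** months


# Assumption: 170% growth per month (rate = 1.70) over two monthly steps.
multiple = compound_growth(1.70, 2)
print(f"Paid users would grow roughly {multiple:.1f}x over the period")
```

Under those assumptions the paid user base would be a little over seven times its September level by November. The actual multiple depends on the real monthly figures, which the article does not give.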

More notably, the model is architected to scale execution through self-directed agent swarms of up to 100 sub-agents operating in parallel across as many as 1,500 coordinated steps.

This shift from scaling model size to scaling coordinated execution reflects Moonshot AI’s emphasis on real-world task completion under real-world time constraints, rather than benchmark performance alone.

From an enterprise lens, this matters for one reason:

Most high-value business work is parallel, messy, and multi-disciplinary.

Strategy decks, compliance reviews, product launches, financial modeling, and front-end development all consist of interdependent workstreams coordinated across teams and functions. AI systems designed for parallel execution are inherently better suited to support this structure.

FAQs

1. What actually changes with Kimi K2.5 compared to earlier open-source models?

The shift is architectural, not cosmetic. Kimi K2.5 treats coordination as a first-class problem. Not just better reasoning or multimodal inputs, but parallel execution across agents. That aligns more closely with how real enterprise work happens.

2. Why should enterprise leaders care about agent swarms instead of stronger single models?

Because most enterprise work is not sequential. It is concurrent, interdependent, and messy. A stronger single agent still bottlenecks. Swarms trade elegance for throughput. That is usually the right trade at scale.

3. Does vision-based coding materially change software delivery timelines?

Yes, but unevenly. It compresses front-end and UI-heavy cycles dramatically, especially where design artifacts dominate. It does less for deeply abstract backend logic. Leaders should expect asymmetry, not blanket acceleration.

4. Is open-source AI like Kimi K2.5 ready for regulated enterprise environments?

Technically, increasingly yes. Operationally, not by default. Open weights shift responsibility onto the buyer. Governance, auditability, and failure attribution become internal problems. Some organizations are prepared for that. Many are not.

5. What is the real risk enterprises underestimate with agent-based AI systems?

Coordination debt. More agents mean more state, more edge cases, and harder traceability when things go wrong. Productivity gains arrive early. Control costs arrive later. Mature teams plan for both.
