Artificial intelligence trends in 2026 are pushing leadership teams toward a more structural question: where does AI actually sit within the operating model?

That question now carries more weight than any individual model release. Organizations reporting measurable business impact are no longer treating AI as a digital initiative or an innovation program. They are integrating it as operational infrastructure, closer to cloud capacity planning than to software deployment.

Moving a system into production forces decisions that pilots avoid: budget ownership, security review, auditability, and operational accountability. A prototype lives inside a team. A production system lives inside the company’s risk posture.

Enterprises abandon experiments all the time. They rarely operationalize something they expect to remove six months later. The moment AI enters a production workflow, it stops being an innovation initiative and becomes part of how work gets done.

The trends below reflect the pressures now reaching senior leadership, regardless of whether AI was originally a strategic priority.

1. The Real Shift: AI Is Becoming Operational Infrastructure

The first wave of enterprise AI centered on copilots and productivity boosts. Helpful, but strategically ambiguous. The second wave is integration into workflows that were previously considered non-automatable.

McKinsey’s 2025 State of AI global survey reports that organizations seeing meaningful business impact from AI are significantly more likely to have fundamentally redesigned their core workflows than to have treated AI as an isolated tool.

Organizations with measurable value were nearly three times as likely as others to embed AI into operational processes tied to revenue and risk outcomes.

Productivity gains scale linearly. Operational redesign scales nonlinearly. A 15% productivity boost rarely impacts an enterprise’s valuation. A faster underwriting cycle does.

Once AI participates in a workflow, accountability questions appear immediately. Who approved the decision: the model or the employee? Most organizations still do not have an answer.

2. Small Models, Not Bigger Models, Are Driving Enterprise Adoption

Public discussion still obsesses over larger foundation models. Enterprises are quietly doing the opposite.

Since mid-2024, companies have moved toward domain-specific models and retrieval-augmented systems because they are predictable. Open-ended reasoning systems remain powerful but operationally unstable. CFOs dislike non-deterministic cost curves.

Gartner forecasts that by 2027 organizations will use small, task-specific AI models at least three times more than general-purpose large language models in enterprise workflows, driven by the need for higher accuracy, lower cost, and predictable performance.

“The variety of tasks in business workflows and the need for greater accuracy are driving the shift towards specialized models fine-tuned on specific functions or domain data,” said Sumit Agarwal, VP Analyst at Gartner.

Security teams in particular prefer constrained systems. A small model trained on internal documentation is auditable. A general foundation model is not. Hallucination risk becomes a governance risk.

There is a trade-off. Narrow systems are less flexible and require ongoing data engineering investment. But executives are increasingly choosing reliability over capability. Quietly.

3. The Security Conversation Flipped

Security operations centers now face a scale problem. The number of alerts has grown faster than analyst capacity for nearly a decade. According to IBM’s 2023 Cost of a Data Breach Report, organizations with extensive AI-driven security automation identified and contained breaches 108 days faster on average than organizations without it.

CISOs are no longer debating whether to use AI. They are debating how to supervise it.

AI is effective at triage but poor at accountability. An AI can flag suspicious behavior across billions of logs, but when it blocks a legitimate user or vendor system, the operational impact lands immediately on leadership. False positives become business outages.

This is why governance frameworks are emerging faster than model standards. NIST’s AI Risk Management Framework 1.0, released in 2023 and extended with a Generative AI Profile in 2024, is being adopted not because regulators require it yet, but because boards want traceability.

4. AI Economics Are Now a Board-Level Issue

One overlooked trend: AI has introduced variable compute cost into knowledge work.

Cloud costs were predictable. Software licenses were predictable.

Inference costs are neither.

Microsoft’s own financial disclosures show how quickly AI demand is moving from experimentation to infrastructure. In its FY2025 Q2 earnings release, the company reported Azure and other cloud services revenue growing 31% year-over-year and explicitly tied ongoing investment to cloud and AI infrastructure expansion.

“We are innovating across our tech stack and helping customers unlock the full ROI of AI to capture the massive opportunity ahead,” said Satya Nadella, chairman and chief executive officer of Microsoft. “Already, our AI business has surpassed an annual revenue run rate of $13 billion, up 175% year-over-year.”

Organizations are now ranking workflows based on value density. Customer support automation may reduce labor costs, but real-time risk modeling in financial transactions protects revenue directly. The second receives budget priority.
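One way to make value density concrete is to divide the value a workflow is expected to protect or generate by what it is expected to cost to run, then sort. The sketch below assumes that definition. The `Workflow` class, its field names, and every figure are hypothetical placeholders, not numbers taken from any report cited here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    annual_value_usd: float     # hypothetical estimate of revenue protected or cost saved
    annual_run_cost_usd: float  # hypothetical inference plus engineering cost

    @property
    def value_density(self) -> float:
        # Assumed definition: value returned per dollar spent running the workflow.
        return self.annual_value_usd / self.annual_run_cost_usd


def rank_by_value_density(workflows: list[Workflow]) -> list[Workflow]:
    """Return workflows ordered from highest to lowest value density."""
    return sorted(workflows, key=lambda w: w.value_density, reverse=True)


# Illustrative placeholder figures only.
candidates = [
    Workflow("support_automation", annual_value_usd=400_000, annual_run_cost_usd=200_000),
    Workflow("realtime_risk_modeling", annual_value_usd=3_000_000, annual_run_cost_usd=500_000),
]

ranked = rank_by_value_density(candidates)
for w in ranked:
    print(f"{w.name}: {w.value_density:.1f}x")
```

Because inference cost is variable, the denominator would in practice be a range rather than a single number, so a ranking like this is better treated as a starting point for budget discussion than as a final answer.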

AI strategy is, therefore, becoming a capital allocation strategy.

5. Regulation Is Shaping Architecture More Than Innovation

Executives often view regulation as a future concern. In AI, it is a present design constraint.

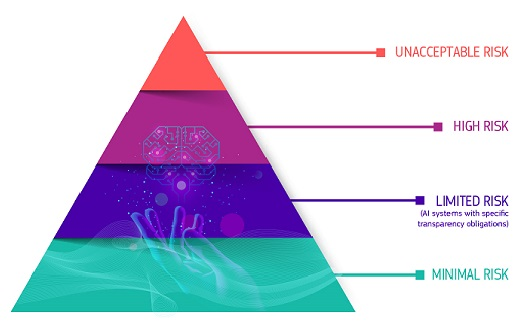

The EU AI Act, finalized in 2024 and entering phased enforcement through 2026, requires documentation, transparency, and risk classification for certain AI applications. Even U.S. companies with European customers must adapt. Many already are.

The practical result: explainability is now an engineering requirement. Not optional. Not academic.

Models used in credit decisions, hiring, medical triage, or fraud detection increasingly need auditability. Black-box systems, even highly accurate ones, struggle in regulated contexts. That is one reason retrieval-augmented architectures have surged. They can cite sources.
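The ability to cite sources comes from the retrieval step: every passage handed to the model carries an identifier that can be stored alongside the answer. A minimal sketch of that idea follows, assuming a toy keyword-overlap retriever in place of a real search or vector index. The document IDs and policy text are invented for illustration.

```python
import re

# Hypothetical internal policy snippets keyed by a citable document ID.
DOCUMENTS = {
    "policy-001": "Credit applications above the limit require manual review.",
    "policy-002": "Fraud alerts must be escalated within one business day.",
    "policy-003": "Vendor accounts are reviewed every quarter.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, top_k: int = 1) -> list[tuple[str, str]]:
    """Return up to top_k (doc_id, passage) pairs sharing the most words with the query."""
    query_terms = tokenize(query)
    scored = [
        (len(query_terms & tokenize(text)), doc_id, text)
        for doc_id, text in DOCUMENTS.items()
    ]
    # Keep only passages with at least one shared word, best match first.
    hits = sorted((s for s in scored if s[0] > 0), reverse=True)
    return [(doc_id, text) for _, doc_id, text in hits[:top_k]]


def answer_with_citations(query: str) -> dict:
    """Bundle the retrieved context with the IDs an auditor could check."""
    passages = retrieve(query)
    return {
        "query": query,
        "context": [text for _, text in passages],
        "citations": [doc_id for doc_id, _ in passages],
    }


result = answer_with_citations("When must fraud alerts be escalated?")
print(result["citations"])
```

The generation step is deliberately left out. The point is only that the citation list exists before any model is called, which is what makes the output traceable.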

The paradox is clear. The most powerful models are often the least governable. Enterprises are choosing governability.

Operating Model Changes Leadership Must Make

Leadership teams should identify three to five operational decisions where faster judgment materially changes outcomes. Then redesign the workflow around AI assistance, human supervision, and audit logging simultaneously. Most companies still implement those sequentially. That sequencing is why pilots stall.
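Designing the three pieces together can be as simple as putting them in one code path: the model suggests, a person decides whenever confidence is low, and a record is written in both cases. The sketch below is one possible shape under stated assumptions. The threshold value, the `AuditRecord` fields, and the `example_reviewer` function are hypothetical and stand in for a real review queue and log store.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Callable

REVIEW_THRESHOLD = 0.90  # hypothetical cut-off below which a human must decide


@dataclass
class AuditRecord:
    case_id: str
    model_suggestion: str
    model_confidence: float
    final_decision: str
    approved_by: str  # "model" or a reviewer ID, so ownership is never ambiguous
    timestamp: str


def decide(
    case_id: str,
    suggestion: str,
    confidence: float,
    human_review: Callable[[str, str], tuple[str, str]],
    audit_log: list[str],
) -> AuditRecord:
    """Apply the model's suggestion or escalate to a human, logging either way."""
    if confidence >= REVIEW_THRESHOLD:
        decision, approver = suggestion, "model"
    else:
        # The reviewer returns (decision, reviewer_id) and may override the model.
        decision, approver = human_review(case_id, suggestion)

    record = AuditRecord(
        case_id=case_id,
        model_suggestion=suggestion,
        model_confidence=confidence,
        final_decision=decision,
        approved_by=approver,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # A plain list stands in for an append-only log store.
    audit_log.append(json.dumps(asdict(record)))
    return record


def example_reviewer(case_id: str, suggestion: str) -> tuple[str, str]:
    # Stand-in for a real review queue: always declines and signs with a fixed ID.
    return "decline", "reviewer-17"


log: list[str] = []
auto = decide("case-1", "approve", 0.97, example_reviewer, log)
escalated = decide("case-2", "approve", 0.62, example_reviewer, log)
print(auto.approved_by, escalated.approved_by, len(log))
```

Each record names an approver, so the question of whether the model or the employee approved a decision can be answered from the log alone.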

Production systems require ownership, escalation paths, and auditability. Those are management structures, not technical ones. Companies waiting for more reliable models are solving the wrong problem. The limiting factor is whether leadership is willing to let decisions be partially produced by systems that must also be governed.

AI will not fail inside enterprises because the technology is immature. It will fail where accountability structures never changed.

FAQs

1. Is AI a cost-reduction tool or a revenue-generation capability for enterprises?

AI that only automates support or documentation saves money. AI embedded in underwriting, pricing, fraud detection, supply planning, or risk forecasting changes how quickly the company reacts to market conditions. That affects revenue quality, not just expense.

2. Where should AI sit in an enterprise org structure: IT, data, or business operations?

IT should secure and maintain it, but business units must own outcomes because AI participates in decisions. When AI lives only in IT, it remains a tool. When it lives in operations, it becomes part of how the company runs.

3. Are large language models necessary for enterprise AI adoption?

Large models help interfaces and knowledge access, but forecasting, risk scoring, anomaly detection, and workflow automation usually rely on smaller or retrieval-based systems that are cheaper, auditable, and more predictable.

4. What is the biggest risk of deploying AI in business workflows?

Errors matter, but the real issue is knowing who approved an AI-assisted decision and how it can be audited. Without clear ownership, companies face legal, regulatory, and operational exposure even when the model performs well.

5. How should leadership prioritize AI investments in 2026?

Instead of piloting chatbots everywhere, leaders should target workflows where faster judgment changes financial outcomes. Examples include credit decisions, supply chain adjustments, dynamic pricing, claims handling, and fraud prevention. If the workflow affects risk or revenue, it deserves priority.
