Weather forecasting has always been a compromise between physics, compute, and time. Better forecasts require bigger machines, longer runtimes, and deeper institutional expertise. National meteorological agencies were built around this logic.

Energy markets, insurers, and others who rely on weather as a primary input inherited the same constraints. The system worked. It just did not scale well, and it never scaled evenly.

What has changed is not that AI suddenly “beat” physics.

What changed is that AI-based forecasting has crossed a threshold where operational viability matters more than theoretical purity. That shift is uncomfortable for parts of the atmospheric science community. It should be. However, it is also irreversible.

Scaling Real-Time Infrastructure Forecasts With GPUs

What makes this shift operationally viable is not the models alone, but the infrastructure beneath them.

NVIDIA underpins much of the modern AI weather stack by enabling rapid training, large ensemble generation, and low-latency, energy-efficient inference at scale.

GPU-accelerated architectures allow forecasting systems to iterate in hours instead of days, update continuously as new data arrives, and run locally where decisions are made.

That combination changes who can deploy advanced weather intelligence and how tightly forecasts can be coupled to real-time operational systems.

Powered by accelerated computing platforms such as NVIDIA’s, AI forecasting enables faster iteration and sovereign, purpose-built systems.

This article explores the technical, governance, and geopolitical consequences of treating weather forecasting as infrastructure rather than science alone.

Lowering the Barrier to Operational Weather AI

A key indicator that AI weather forecasting is moving beyond research environments is the emergence of open, end-to-end platforms designed to support the full forecasting lifecycle, from ingesting observational data to producing medium-range global forecasts and high-resolution local nowcasts.

One prominent example is NVIDIA Earth-2, which frames weather and climate AI as deployable infrastructure rather than isolated models. Instead of focusing solely on forecast accuracy, the platform emphasizes accessibility.

Pretrained models, open frameworks, customization workflows, and accelerated inference tools are designed to run on organizational or national infrastructure, not just centralized supercomputers.

AI Weather Forecasting Crosses the Operational Threshold

Physics-based NWP systems are extraordinarily good at representing the atmosphere. They are also brittle. Expensive to run. Slow to iterate. Difficult to localize without deep expertise.

When they fail, they fail gracefully. When they lag, they lag everywhere.

AI systems invert that profile.

They are fast. Cheap to rerun. Easy to fine-tune for specific geographies or use cases. They are also opaque, sensitive to data bias, and capable of failing in ways that are harder to diagnose.

That trade-off is not theoretical. It shows up in real operations.

Operational AI Changes the Forecasting Equation

One early signal of this shift is the emergence of AI-native forecasting systems already operating alongside traditional NWP in production settings.

Deep learning models such as GraphCast and FourCastNet have demonstrated short- to medium-range forecast skill comparable to, and in some cases exceeding, leading global models on select variables, while running orders of magnitude faster.

Google DeepMind states that “GraphCast makes forecasts at the high resolution of 0.25 degrees longitude/latitude (28km x 28km at the equator). That’s more than a million grid points covering the entire Earth’s surface.”
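The figures in that quote can be sanity-checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes a regular latitude/longitude grid that includes both poles and a mean Earth radius of 6,371 km, neither of which is stated in the quote, and the function name `latlon_grid_stats` is hypothetical.

```python
import math

# Assumed mean Earth radius in kilometres (not taken from the quote).
EARTH_RADIUS_KM = 6371.0


def latlon_grid_stats(resolution_deg: float) -> dict:
    """Count grid points and equatorial spacing for a regular lat/lon grid.

    Assumes the grid spans 360 degrees of longitude and includes a row
    of points at each pole, so latitude has one more row than 180 / resolution.
    """
    n_lon = round(360 / resolution_deg)
    n_lat = round(180 / resolution_deg) + 1
    equator_spacing_km = 2 * math.pi * EARTH_RADIUS_KM / n_lon
    return {
        "n_lon": n_lon,
        "n_lat": n_lat,
        "n_points": n_lon * n_lat,
        "equator_spacing_km": equator_spacing_km,
    }


stats = latlon_grid_stats(0.25)
print(f"{stats['n_lon']} x {stats['n_lat']} = {stats['n_points']:,} grid points")
print(f"Spacing at the equator: {stats['equator_spacing_km']:.1f} km")
```

Under these assumptions the grid has 1,440 by 721 points, a little over one million, with roughly 28 km between neighboring points at the equator. The spacing shrinks toward the poles, which is why the quote specifies "at the equator."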

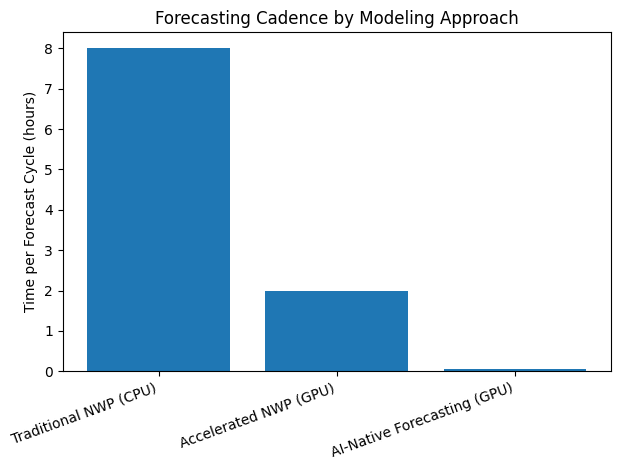

What matters operationally is not the leaderboard result, but the cadence. These systems can be retrained frequently, generate large ensembles on demand, and be deployed on GPU clusters without access to national supercomputing centers.

That capability has made AI forecasts viable not just for global centers, but for grid operators, commodity desks, and regional agencies that previously consumed weather as a static external input rather than a continuously updated control signal.

Why the Future of Weather Forecasting Is No Longer Academic

Traditional models treat resolution as a grid problem. Smaller grid cells equal better forecasts, if you can afford them. Many AI models instead operate on compressed latent representations, reconstructing high-resolution fields only when they produce their output.

You are no longer resolving every physical interaction explicitly. You are learning which interactions matter most for the forecast horizon you care about.

That works remarkably well for many variables. It also creates blind spots, particularly for rare or structurally novel events.

In other words, AI forecasting does not fail where physics fails. It fails differently.

That distinction matters when these systems are used for emergency response, infrastructure planning, or regulatory decisions. Faster is not always safer. Cheaper is not always better.

From Supercomputers to Software

Perhaps the most consequential shift is not technological at all. It is geopolitical.

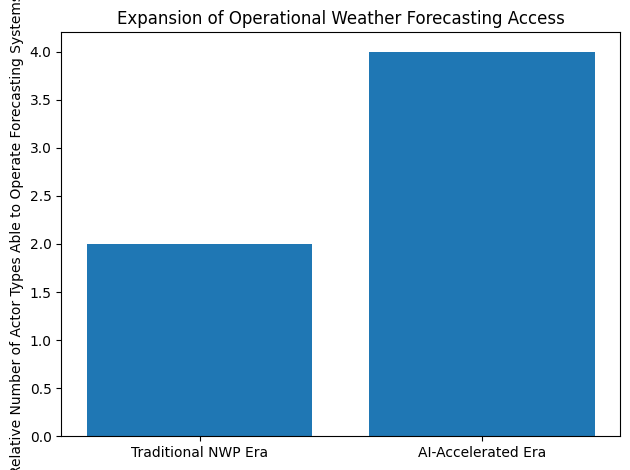

As AI forecasting pipelines become modular and affordable, more countries and organizations can build sovereign forecasting systems. Not copies of global centers. Purpose-built stacks optimized for local needs, local data, and local risk profiles.

This decentralization weakens the historical hierarchy of global forecasting. It also complicates coordination.

The weather does not respect borders. Forecasting systems increasingly do.

The next decade will test whether distributed AI forecasting leads to healthy redundancy or fragmented truth. Probably both.

Weather Forecasting Enters Its Platform Era

AI forecasting thrives on scale. Data scale. Model scale. Ensemble scale. Weather risk, however, is local, contextual, and political.

The same system that improves grid stability can be used to optimize commodity trading. The same probabilistic forecast that helps emergency planners can be weaponized for financial advantage.

Openness amplifies both outcomes.

There is no neutral deployment of weather intelligence. This is not an argument against AI forecasting. It is an argument against pretending it is just another software upgrade.

Where This Is Heading

The future of weather forecasting will not be a clean handoff from physics to AI. It will be a messy hybrid.

Physics-based models will remain the backbone for climate-scale understanding and long-horizon stability. AI systems will increasingly dominate short- and medium-range decision-making, especially where speed and customization matter.

The organizations that win will not be the ones with the “best model.” They will be the ones who understand where each approach breaks, and design systems that fail predictably rather than optimally.

Weather forecasting is becoming infrastructure. AI is becoming unavoidable. The hard work now is not building faster models. It is deciding how much uncertainty we are willing to operationalize, and who bears the cost when those uncertainties surface.

The AITech Insights Analysis for NVIDIA Earth-2

NVIDIA Earth-2 is less a breakthrough in forecast accuracy and more a structural shift in how weather intelligence is produced, governed, and deployed.

Earth-2 reframes weather forecasting as operational infrastructure rather than a centralized scientific service. Instead of assuming access to national supercomputing centers, it is designed to run on organizational or sovereign GPU infrastructure.

That change lowers the barrier for energy operators, grid managers, insurers, and public agencies to move from passively consuming forecasts to actively operating forecasting systems tailored to their own risk environments.

From a governance standpoint, this approach accelerates decentralization. Forecasting capability is no longer concentrated in a small number of global institutions. Instead, it becomes modular, portable, and locally controllable. That improves responsiveness and resilience, but it also fragments shared situational awareness.

Technically, Earth-2 reflects a broader industry move toward probabilistic, ensemble-first forecasting. GPU acceleration makes large ensembles economically viable, enabling operators to reason about uncertainty rather than rely on single deterministic outputs.
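To make "reasoning about uncertainty" concrete, here is a minimal sketch of how an operator might summarize an ensemble instead of reading a single deterministic value. It is not Earth-2 code and uses no Earth-2 API. The ensemble members are synthetic random numbers standing in for wind-speed forecasts, and the function name `summarize_ensemble`, the 15 m/s threshold, and the ensemble size of 200 are all assumptions chosen for illustration.

```python
import random
import statistics


def summarize_ensemble(members, threshold):
    """Return (mean, spread, probability of exceeding threshold).

    `members` holds one forecast value per ensemble member for a single
    location and lead time. Spread is the population standard deviation.
    """
    mean = statistics.fmean(members)
    spread = statistics.pstdev(members)
    p_exceed = sum(m > threshold for m in members) / len(members)
    return mean, spread, p_exceed


# Synthetic stand-in for 200 ensemble members of 10 m wind speed (m/s).
# Real members would come from a forecasting system, not a random generator.
rng = random.Random(0)
members = [rng.gauss(12.0, 3.0) for _ in range(200)]

mean, spread, p_exceed = summarize_ensemble(members, threshold=15.0)
print(f"mean={mean:.1f} m/s, spread={spread:.1f} m/s, P(>15 m/s)={p_exceed:.0%}")
```

A deterministic forecast would report only something like the mean. The exceedance probability is what lets a grid operator or emergency planner attach a decision threshold to the forecast, and it only becomes trustworthy when the ensemble is large enough to sample the tails.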

AI-driven forecasting systems are opaque, sensitive to data coverage, and prone to degradation outside their training distributions. Treating Earth-2 as infrastructure rather than software makes validation, oversight, and fallback strategies a governance requirement rather than an afterthought.
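One way to picture a fallback strategy is a guard that decides, per forecast, whether the AI output can be used or whether the system should revert to a physics-based value. The sketch below is a hypothetical illustration, not a documented Earth-2 mechanism. The names `Forecast` and `select_forecast`, the range check on inputs, and the disagreement threshold are all assumptions, and real validation would be considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Forecast:
    source: str   # "ai" or "nwp"
    value: float
    reason: str   # why this source was chosen


def select_forecast(ai_value, nwp_value, inputs, training_range, max_disagreement):
    """Prefer the AI forecast unless a simple guard trips.

    Guards (both are illustrative assumptions):
      1. Any input falls outside the range seen in training.
      2. The AI and physics-based values differ by more than max_disagreement.
    """
    low, high = training_range
    if any(x < low or x > high for x in inputs):
        return Forecast("nwp", nwp_value, "input outside training range")
    if abs(ai_value - nwp_value) > max_disagreement:
        return Forecast("nwp", nwp_value, "AI and NWP disagree beyond tolerance")
    return Forecast("ai", ai_value, "guards passed")


# Example: inputs are within range and the two forecasts roughly agree.
chosen = select_forecast(
    ai_value=10.0,
    nwp_value=11.0,
    inputs=[5.0, 7.5],
    training_range=(0.0, 20.0),
    max_disagreement=3.0,
)
print(chosen)
```

The point of recording a `reason` alongside the value is auditability: when a fallback fires, operators and regulators can see which guard triggered it rather than inferring it after the fact.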

FAQs

1. Why is AI weather forecasting being treated as critical infrastructure rather than a scientific upgrade?

AI forecasting directly affects grid stability, supply chains, emergency response, and financial markets. Its value lies in speed, localization, and operational integration, not just forecast accuracy.

2. How does GPU-accelerated AI forecasting change real-time decision-making for enterprises?

It enables frequent retraining, rapid ensemble generation, and low-latency inference. This allows organizations to act on continuously updated forecasts instead of static daily models.

3. Can AI weather models realistically replace traditional numerical weather prediction systems?

AI complements rather than replaces physics-based models. It increasingly dominates short- to medium-range operational decisions, while traditional systems remain essential for long-term stability and climate understanding.

4. What new risks do AI-driven weather forecasts introduce for regulators and operators?

AI systems fail differently from physics-based models. Their opacity, data sensitivity, and bias risks can complicate accountability, especially in emergency response and regulatory contexts.

5. Why does AI weather forecasting have geopolitical and sovereignty implications for the U.S.?

Lower infrastructure costs allow more regional and private actors to build localized forecasting systems. This decentralizes forecasting power but complicates coordination, standardization, and shared situational awareness.
